HMB makes DRAM-Less SSDs more cost-competitive.

A solid state drive (SSD) is a storage device that uses NAND flash memory as its storage medium. Thanks to the physical characteristics of NAND Flash, SSDs have many advantages over traditional hard disk drives, such as low power consumption, low noise, light weight, shock resistance and high efficiency. As a result, SSD shipments in the storage market have been increasing in recent years.

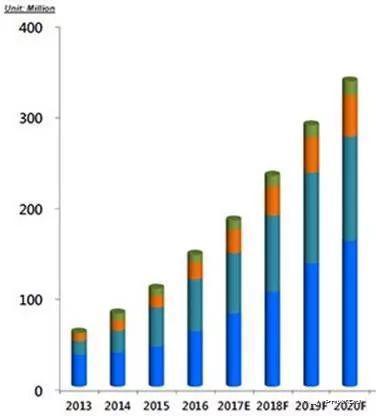

According to a report from DRAMeXchange, a semiconductor memory research institute, SSD shipments are still trending upward, especially in the client SSD market, and growth is expected to continue until 2020, as shown in Figure 1.

Figure 1 Statistics and estimates of SSD shipments / data source: DRAMeXchange (2017)

SSD interfaces continue to evolve, with PCIe scaling better than SATA

The physical interfaces of SSDs are mainly Serial Advanced Technology Attachment (SATA) and Peripheral Component Interconnect Express (PCIe). SATA Gen3, with a theoretical transfer rate of 6 Gb/s, is the most popular SSD interface on the current market. Over the past few years, SSDs on SATA Gen3 have offered a clear data-transfer advantage over traditional hard drives, and SSD market share has risen year by year.

Meanwhile, NAND Flash process technology and related technologies keep evolving. The interface standards between NAND Flash and the controller have greatly increased speed, progressing from Legacy Mode to Toggle 2.0/ONFI 4.0. As a result, SATA Gen3, still the most popular interface, has become a major bottleneck in SSD development because of its limited theoretical bandwidth, while PCIe SSDs have developed rapidly.

PCIe Gen3 not only provides a theoretical transfer rate of up to 8 GT/s per lane, but also scales well, because the theoretical bandwidth multiplies with the number of lanes. Since the PCIe interface can currently be extended from 1 lane to 16 lanes, its transmission bandwidth is much higher than SATA's.
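To put rough numbers on this comparison, the sketch below converts raw line rates into usable bandwidth. It assumes the standard line encodings (8b/10b for SATA Gen3, 128b/130b for PCIe Gen3), which the text above does not mention, and it ignores packet and protocol overhead, so real throughput is somewhat lower.

```python
# Usable bandwidth after line encoding only; protocol/packet overhead is ignored.
SATA3_RATE_GBPS = 6.0        # SATA Gen3 line rate, Gb/s
SATA3_ENCODING = 8 / 10      # 8b/10b line encoding
PCIE3_RATE_GTPS = 8.0        # PCIe Gen3 rate per lane, GT/s
PCIE3_ENCODING = 128 / 130   # 128b/130b line encoding


def sata3_mb_per_s() -> float:
    """Usable SATA Gen3 bandwidth in MB/s (1 MB = 10**6 bytes)."""
    return SATA3_RATE_GBPS * SATA3_ENCODING * 1000 / 8


def pcie3_mb_per_s(lanes: int) -> float:
    """Usable PCIe Gen3 bandwidth per direction in MB/s; scales linearly with lanes."""
    return PCIE3_RATE_GTPS * PCIE3_ENCODING * 1000 / 8 * lanes


if __name__ == "__main__":
    print(f"SATA Gen3: {sata3_mb_per_s():.0f} MB/s")
    for lanes in (1, 2, 4, 16):
        print(f"PCIe Gen3 x{lanes}: {pcie3_mb_per_s(lanes):.0f} MB/s")
```

For the PCIe Gen3 x2 configuration used in the computational model later in this article, this works out to roughly 1.97 GB/s, more than three times SATA Gen3's 600 MB/s.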

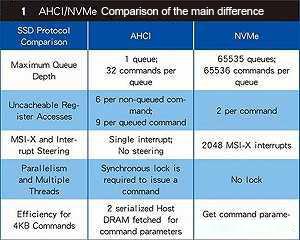

Besides the evolution of the physical interface, the transfer protocol between the SSD and the host has also responded to the rapid development of NAND Flash, gradually shifting from the Advanced Host Controller Interface (AHCI) to Non-Volatile Memory Express (NVMe). Table 1 briefly shows the main differences between AHCI and NVMe.
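One of those differences is easy to express in numbers: command queuing. The AHCI specification allows a single command queue of up to 32 commands, while the NVMe specification allows up to 65,535 I/O queues of up to 65,536 commands each. The snippet below only multiplies out these specification limits; real drives and host drivers use far fewer queues.

```python
# Specification limits: (number of command queues, commands per queue).
PROTOCOL_LIMITS = {
    "AHCI": (1, 32),
    "NVMe": (65_535, 65_536),  # I/O queues only; the admin queue is not counted
}


def max_outstanding_commands(protocol: str) -> int:
    """Upper bound on commands the protocol can have in flight at once."""
    queues, depth = PROTOCOL_LIMITS[protocol]
    return queues * depth


if __name__ == "__main__":
    for name in PROTOCOL_LIMITS:
        print(f"{name}: {max_outstanding_commands(name):,} commands")
```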

Several major factors directly affect the sales volume of SSD products in the client market.

Price

In general, NAND Flash is the most expensive component of an SSD. Depending on each manufacturer's SSD configuration, NAND Flash can account for 80% to 95% of the overall SSD bill of materials (BOM).

However, NAND Flash is the SSD's storage medium and cannot be removed. Therefore, one way to cut costs and lower the price is to remove the SSD's other, non-essential components.

Dynamic random access memory (DRAM) is usually the first component manufacturers consider removing. Roughly speaking, a 4Gb DRAM chip costs about 3 to 4 dollars, so removing DRAM from the SSD's BOM helps considerably with both cost and price.

Power consumption

Power consumption is another major consideration for SSD vendors, especially for SSD products targeting PC OEMs. Generally, an SSD accounts for about 5% to 10% of a portable device's power consumption.

If the SSD consumes less power, more of the overall power budget is left for the other components, further extending the battery life of the portable device.

Reliability

For a storage device, reliability is naturally the user's most important consideration. Although error detection and error correction techniques are increasingly mature, removing DRAM can further reduce the possibility of losing or corrupting data, since less data is then held in the SSD's volatile memory.

Performance

Although all of the above factors matter to SSD manufacturers and users, performance still strongly influences consumers' willingness to buy SSDs. Without DRAM as a cache, an SSD's performance is significantly affected, which is why DRAM-Less SSDs are not yet popular in the PCIe SSD market. Consumers who buy PCIe SSDs mostly want high-speed access, and removing DRAM reduces the SSD's overall performance.

HMB helps DRAM-Less SSDs improve performance.

Fortunately, the NVM Express organization recognized this trend in the client SSD market. In 2014, NVMe specification v1.2 introduced the Host Memory Buffer (HMB) feature to improve the overall performance of DRAM-Less SSDs. This client SSD solution is expected to strike a better balance between price and performance.

Host Memory Buffer, as its name implies, is a mechanism through which the host lends part of its currently unused memory to the SSD via the NVMe protocol. In this way, a DRAM-Less SSD can use additional DRAM as a cache to improve performance without carrying DRAM of its own. When the SSD obtains HMB resources from the host, what information does the SSD controller place in this area? Strictly speaking, this is determined by the firmware of each SSD controller.

However, judging from previous SSD implementations, the logical-to-physical address translation table (L2P Mapping Table) is the system information most likely to be placed in the HMB.

Simply put, the L2P Mapping Table records the mapping between logical page positions and physical page positions. Every read or write requires the SSD to access some L2P mapping information. It is therefore reasonable to place the L2P Mapping Table in the HMB to reduce access time and increase SSD access speed.
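To make the mapping concrete, here is a minimal sketch of a flat L2P table. The 4 KiB mapping granularity, 4-byte entries, and the `UNMAPPED` marker are illustrative assumptions, not details from any particular controller; real FTLs vary.

```python
from array import array

PAGE_SIZE = 4096        # assumed mapping granularity: one entry per 4 KiB logical page
ENTRY_BYTES = 4         # assumed 32-bit physical page number per entry
UNMAPPED = 0xFFFFFFFF   # hypothetical marker for a logical page never written


def l2p_table_bytes(capacity_bytes: int) -> int:
    """Size of a flat L2P table covering the whole drive."""
    return capacity_bytes // PAGE_SIZE * ENTRY_BYTES


class L2PTable:
    """Flat logical-to-physical page map (illustrative, not a real FTL)."""

    def __init__(self, num_logical_pages: int):
        # 'I' is a 4-byte unsigned int on mainstream platforms.
        self.map = array("I", [UNMAPPED]) * num_logical_pages

    def lookup(self, lpn: int) -> int:
        """Return the physical page number that holds logical page lpn."""
        return self.map[lpn]

    def update(self, lpn: int, ppn: int) -> None:
        # NAND pages are not overwritten in place: new data goes to a fresh
        # physical page and only this mapping entry changes.
        self.map[lpn] = ppn


if __name__ == "__main__":
    print(l2p_table_bytes(512 * 2**30) // 2**20, "MiB for a 512 GiB drive")
```

Under these assumptions a 512 GiB drive needs a 512 MiB table, the capacity of one 4Gb DRAM chip, in line with the common rule of thumb of about 1 MB of DRAM per 1 GB of NAND. That is far more than a controller's embedded SRAM can hold, so without DRAM or HMB only a small part of the table can be cached.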

How does introducing HMB actually affect the performance of a DRAM-Less SSD? The following overall performance trends come from a simple computational model (a 4-channel DRAM-Less SSD controller, a PCIe Gen3 x2 interface, and 3D NAND Flash).

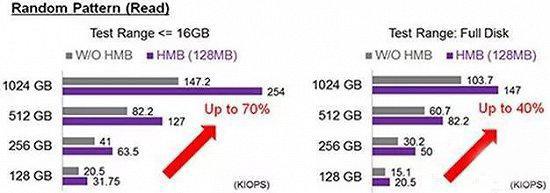

To show the performance difference with HMB enabled simply, let's assume that the HMB size the SSD can obtain from the host is fixed at 128 MB. As Figure 2 clearly shows, sequential read and write performance improves little after HMB is enabled. Because HMB is still essentially volatile memory, usually only a small portion of it is used to store user read and write data; most of it serves as a cache for L2P Mapping Tables (implementation varies with each SSD manufacturer's design).

Figure 2 Comparison of sequential read and write performance before and after HMB is turned on

Improving overall performance is not easy: the key lies in HMB design

In general, reading data from RAM takes far less time than reading it from NAND Flash (ns vs. µs). So if an appropriate algorithm raises the hit rate of the L2P Mapping Table in the HMB, overall performance can be improved to a certain extent.
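The hit-rate argument above is the usual average-access-time formula, t_avg = h × t_RAM + (1 − h) × t_NAND, where h is the fraction of lookups served from RAM. The sketch below evaluates it; the 100 ns and 50 µs latencies are illustrative assumptions, not figures from the model in this article.

```python
def avg_map_lookup_ns(hit_rate: float, ram_ns: float, nand_ns: float) -> float:
    """Expected L2P lookup latency: hits served from RAM, misses fetched from NAND."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * ram_ns + (1.0 - hit_rate) * nand_ns


if __name__ == "__main__":
    RAM_NS, NAND_NS = 100.0, 50_000.0  # assumed: ~100 ns RAM access, ~50 us NAND read
    for h in (0.5, 0.9, 0.99):
        print(f"hit rate {h:.2f}: {avg_map_lookup_ns(h, RAM_NS, NAND_NS):,.0f} ns")
```

Because the NAND term dominates, raising the hit rate from 90% to 99% cuts the average lookup from about 5.1 µs to about 0.6 µs under these assumed latencies.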

In sequential reads and writes, because the user's data is contiguous, the logical-to-physical mapping is also contiguous. Thus, the L2P Mapping Table rarely needs to be fetched from NAND Flash into the HMB.

In other words, during sequential reads and writes the L2P Mapping Table seldom has to be fetched, because its hit rate is very high. A small portion of the table held in the controller's embedded static random access memory (SRAM), which is costly and small, is therefore sufficient. This is why the HMB's ability to hold more of the table has little impact on sequential performance.

When the user performs random reads, the SSD controller cannot predict the position of the next data to be processed; that is, the hit rate of the small portion of the L2P Mapping Table held in SRAM is significantly lower than in sequential reads and writes.

In this case, if additional cache space is available to hold more of the L2P Mapping Table, the hit rate rises, fewer fetches from NAND Flash are needed, and random read performance can improve significantly.
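The contrast between sequential and random access can be reproduced with a toy cache simulation. This is a minimal sketch, assuming the table is cached in fixed segments of 1024 entries with least-recently-used (LRU) replacement; the segment size, cache sizes, and LRU policy are illustrative choices, not a description of any vendor's FTL.

```python
import random
from collections import OrderedDict

ENTRIES_PER_SEGMENT = 1024  # assumed: a 4 KiB table segment holds 1024 four-byte entries


class SegmentCache:
    """LRU cache of L2P table segments (toy stand-in for SRAM, or SRAM + HMB)."""

    def __init__(self, capacity_segments: int):
        self.capacity = capacity_segments
        self.segments = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, lpn: int) -> None:
        seg = lpn // ENTRIES_PER_SEGMENT
        if seg in self.segments:
            self.hits += 1
            self.segments.move_to_end(seg)  # mark as most recently used
        else:
            self.misses += 1  # a real controller would fetch this segment from NAND
            self.segments[seg] = True
            if len(self.segments) > self.capacity:
                self.segments.popitem(last=False)  # evict least recently used

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def simulate(pattern: str, total_pages: int, cache_segments: int,
             n: int = 100_000, seed: int = 0) -> float:
    """Hit rate for n single-page reads, sequential ("seq") or uniform random."""
    rng = random.Random(seed)
    cache = SegmentCache(cache_segments)
    for i in range(n):
        lpn = i % total_pages if pattern == "seq" else rng.randrange(total_pages)
        cache.access(lpn)
    return cache.hit_rate()


if __name__ == "__main__":
    PAGES = 2**24  # 64 GiB of 4 KiB pages, i.e. 16,384 segments
    print(simulate("seq", PAGES, 64))     # close to 1: one miss per 1024 reads
    print(simulate("rand", PAGES, 64))    # close to 0: small cache, random reads
    print(simulate("rand", PAGES, 4096))  # roughly a quarter: larger cache
```

Sequential reads miss only once per segment, so the hit rate stays near 99.9% even with a tiny cache. Uniform random reads hit roughly in proportion to the share of the table the cache holds, which is why extra cache space such as HMB matters for random workloads.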

According to this simple computational model, for random reads across the entire SSD storage space (Full Disk), HMB can improve performance by as much as 40%. We can also observe another trend: the improvement from HMB becomes more significant as the overall SSD capacity increases.

Figure 3 Comparison of 4 KB random read performance before and after HMB is turned on

This is because, when random reads span a sufficiently large amount of test data, the larger the DRAM-Less SSD's capacity, the lower the hit rate of the Mapping Table held in a cache of the same SRAM size. If the SSD can obtain the HMB resources released by the host, an appropriate Flash Translation Layer (FTL) architecture can improve performance to a greater extent.

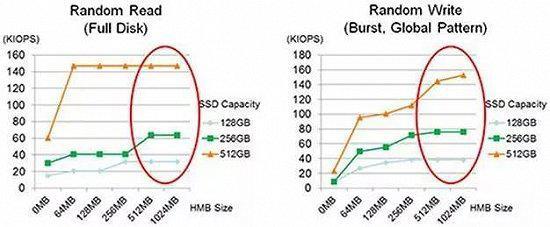

Figure 4 shows the trend of HMB performance gains for 4 KB random writes, based on the same DRAM-Less SSD estimation model. For an SSD controller, writing is more complicated than reading.

Figure 4 Comparison of 4 KB random write performance before and after HMB is turned on

If there is not enough cache space to store the L2P Mapping Table, then during random writes the controller must access NAND Flash blocks more frequently to fetch the L2P mapping information for each write.

In addition, writing data to NAND Flash generally takes much longer than reading it (ms vs. µs), so if an SSD has no DRAM cache, its random write performance is greatly affected.
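A toy model shows why writes hurt more than reads. In this sketch each cache miss costs one NAND read to load the mapping segment, and because every cached segment has been modified by a write, each eviction costs one NAND program to write the segment back. The segment size, LRU policy, and whole-segment write-back are simplifying assumptions; real firmware batches and journals mapping updates in various ways.

```python
import random
from collections import OrderedDict

ENTRIES_PER_SEGMENT = 1024  # assumed entries per cached L2P segment


class WriteBackSegmentCache:
    """Toy LRU cache of L2P segments that counts NAND traffic caused by host writes."""

    def __init__(self, capacity_segments: int):
        self.capacity = capacity_segments
        self.segments = OrderedDict()  # cached segment ids, least recently used first
        self.nand_map_reads = 0         # segments loaded from NAND on a miss
        self.nand_map_programs = 0      # modified segments programmed back on eviction

    def host_write(self, lpn: int) -> None:
        seg = lpn // ENTRIES_PER_SEGMENT
        if seg in self.segments:
            self.segments.move_to_end(seg)
        else:
            self.nand_map_reads += 1  # the segment must be loaded before it can be updated
            if len(self.segments) >= self.capacity:
                self.segments.popitem(last=False)
                # Every cached segment was modified by a write, so the evicted
                # one has to be programmed back to NAND.
                self.nand_map_programs += 1
            self.segments[seg] = True
        # The data itself goes to a fresh physical page; the entry in seg now points there.


def random_write_cost(total_pages: int, cache_segments: int,
                      n: int = 50_000, seed: int = 1):
    """Per-write NAND map reads and map programs for uniform random 4 KiB writes."""
    rng = random.Random(seed)
    cache = WriteBackSegmentCache(cache_segments)
    for _ in range(n):
        cache.host_write(rng.randrange(total_pages))
    return cache.nand_map_reads / n, cache.nand_map_programs / n


if __name__ == "__main__":
    PAGES = 2**22  # 16 GiB of 4 KiB pages, i.e. 4,096 segments
    print(random_write_cost(PAGES, 16))    # tiny cache: nearly one read and one program per write
    print(random_write_cost(PAGES, 4096))  # whole table cached: no write-backs at all
```

With a tiny cache almost every random write adds both a mapping read and a slow mapping program on top of the data write, while a cache large enough for the whole table removes the write-backs entirely in this model.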

If a DRAM-Less SSD is designed to support HMB and can therefore acquire additional DRAM resources from the host, the benefits are even more significant.

Under the same estimation model, for random writes across the entire SSD storage space (Full Disk), a DRAM-Less SSD that supports HMB can perform 4 to 5 times better.

HMB size affects read and write performance, so SSD design must be more comprehensive.

The DRAM-Less SSD performance trends above assume that the device can continuously obtain a fixed, dedicated 128 MB of memory from the host.

In practice, however, the host allocates the HMB dynamically, according to its current memory usage and the SSD's requirements. If the memory available on the host does not meet the SSD's requirements, the SSD will not use the HMB.

Therefore, DRAM-Less SSD controller firmware should be designed to work with more than one HMB size, raising the probability of actually getting an HMB and optimizing the user experience.
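In NVMe, the controller advertises a preferred HMB size (HMPRE) and a minimum size (HMMIN), both in 4 KiB units, in its Identify Controller data; if the host enables the feature, it tells the controller how much memory it actually allocated. The sketch below shows one way firmware could support several HMB sizes. The three tiers, their sizes, and the layout names are hypothetical, chosen only to illustrate the fallback logic.

```python
from typing import Optional, Tuple

KIB = 1024
MIB = 1024 * KIB
HMB_UNIT = 4 * KIB  # HMPRE and HMMIN are expressed in 4 KiB units

# Hypothetical firmware layouts, largest first: (minimum HMB bytes, layout).
HMB_TIERS = [
    (512 * MIB, "full L2P table in HMB"),
    (128 * MIB, "partial L2P cache in HMB"),
    (32 * MIB, "hot-segment L2P cache in HMB"),
]


def advertised_sizes() -> Tuple[int, int]:
    """(HMMIN, HMPRE) in 4 KiB units: the smallest and largest usable tiers."""
    return HMB_TIERS[-1][0] // HMB_UNIT, HMB_TIERS[0][0] // HMB_UNIT


def choose_layout(granted_bytes: int) -> Optional[str]:
    """Pick the richest layout the granted HMB can hold; None means run without HMB."""
    for min_bytes, layout in HMB_TIERS:
        if granted_bytes >= min_bytes:
            return layout
    return None


if __name__ == "__main__":
    print(advertised_sizes())
    for granted_mib in (1024, 200, 64, 16):
        print(granted_mib, "MiB ->", choose_layout(granted_mib * MIB))
```

Advertising a small minimum alongside a large preferred size lets the host grant whatever it can spare, and the firmware still has a usable layout for each grant above the minimum.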

Based on the same estimation model, Figure 5 shows 4 KB random read and write results for HMB sizes ranging from 0 (no HMB) to 1024 MB.

Figure 5 Effects of different HMB sizes on 4 KB random read and write performance

We can observe that although performance generally improves as the HMB size increases, it begins to saturate once the HMB is large enough. This is because a sufficiently large HMB lets the SSD controller place all the system information it needs (including the L2P Mapping Table) in the HMB for read and write operations.
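The saturation effect can be sketched with a simple coverage model: under uniform random access, the steady-state mapping hit rate is roughly the fraction of the L2P table that fits in SRAM plus HMB, capped at 100%. The 4-byte-per-4-KiB table layout, the 512 GiB capacity, and the 2 MiB SRAM size are assumptions for illustration; the model also ignores any other system data or user-data buffering the firmware might keep in the HMB.

```python
MIB = 2**20
GIB = 2**30


def l2p_bytes(capacity_bytes: int) -> int:
    """Flat L2P table size, assuming one 4-byte entry per 4 KiB logical page."""
    return capacity_bytes // 4096 * 4


def map_hit_rate(capacity_bytes: int, sram_bytes: int, hmb_bytes: int) -> float:
    """Steady-state L2P hit rate for uniform random access when SRAM + HMB cache the table."""
    return min(1.0, (sram_bytes + hmb_bytes) / l2p_bytes(capacity_bytes))


def saturation_hmb(capacity_bytes: int, sram_bytes: int) -> int:
    """Smallest HMB that lets the whole table fit; more HMB cannot raise the hit rate."""
    return max(0, l2p_bytes(capacity_bytes) - sram_bytes)


if __name__ == "__main__":
    CAPACITY, SRAM = 512 * GIB, 2 * MIB  # hypothetical drive and SRAM sizes
    for hmb_mib in (0, 128, 256, 512, 1024):
        rate = map_hit_rate(CAPACITY, SRAM, hmb_mib * MIB)
        print(f"HMB {hmb_mib:>4} MiB -> hit rate {rate:.3f}")
    print("saturates at", saturation_hmb(CAPACITY, SRAM) // MIB, "MiB of HMB")
```

With these assumed sizes the modeled hit rate climbs from about 0.4% with no HMB to 100% once the HMB reaches 510 MiB, and stays flat beyond that.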

At that point, DRAM-Less SSD performance can be regarded as optimal (theoretically equivalent to a conventional SSD equipped with the same amount of DRAM). Therefore, when designing the firmware architecture, SSD controller vendors need to consider which system data structures allow a DRAM-Less SSD to reach this performance saturation point.

In other words, a decisive factor for each SSD controller manufacturer in bringing PCIe DRAM-Less SSD products to market is how to design the data structures that support HMB in a DRAM-Less SSD, balancing access speed against memory consumption, and how to optimize for HMB sizes that, statistically, the host is more likely to allocate to the SSD.

While improving performance is the original purpose of HMB, having covered performance we still need to return to the most basic requirement for every storage device: data integrity.

Consider a question: can an SSD controller fully trust all of the information it keeps in the HMB? Ideally, yes; in practice, we still recommend a sufficiently strong protection mechanism.

When a DRAM-Less SSD is allocated an HMB, what information will it store there? In fact, the answer varies with the firmware design of each SSD controller. However, the data buffer, the L2P Mapping Table, or other system information the controller requires are all possible candidates.

Some of this information is critical to the SSD controller, or even impossible to reconstruct. Therefore, when NVMe introduced HMB, it required SSD controllers that support HMB to guarantee the integrity of data in the SSD while the HMB function is in use.

In addition, unexpected or even illegal host-side accesses, or corruption during data transfer, may affect the information stored in the HMB. How to ensure the integrity of data stored in the HMB has therefore become a key topic for PCIe DRAM-Less SSDs.
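One possible approach is for the controller to keep a checksum for every segment it places in the HMB, stored in its own SRAM where the host cannot touch it, and to verify the checksum on every read-back. The sketch below uses Python's `zlib.crc32`; the class, the `load_from_nand` callback, and the reload-on-mismatch policy are hypothetical. Two caveats apply: a CRC catches accidental corruption but not a deliberately forged segment (a keyed MAC would be needed for that), and reloading only works for data that still has a valid copy in NAND, so information that cannot be reconstructed needs stronger protection.

```python
import zlib
from typing import Callable, Dict


class HmbSegmentStore:
    """Keeps segments in (simulated) host memory with a controller-side CRC32 each."""

    def __init__(self, load_from_nand: Callable[[int], bytes]):
        self.host_memory: Dict[int, bytearray] = {}  # stands in for the HMB
        self.crc: Dict[int, int] = {}                 # kept inside the controller
        self.load_from_nand = load_from_nand          # hypothetical NAND read-back hook
        self.reloads = 0

    def put(self, seg: int, data: bytes) -> None:
        """Copy a segment into the HMB and record its checksum in controller memory."""
        self.host_memory[seg] = bytearray(data)
        self.crc[seg] = zlib.crc32(data)

    def get(self, seg: int) -> bytes:
        """Return a verified segment, reloading it from NAND if the HMB copy is bad."""
        data = bytes(self.host_memory[seg])
        if zlib.crc32(data) != self.crc[seg]:
            # Mismatch: do not trust the HMB copy; rebuild it from NAND.
            self.reloads += 1
            data = self.load_from_nand(seg)
            self.put(seg, data)
        return data


if __name__ == "__main__":
    nand = {0: b"\x01\x02\x03\x04"}
    store = HmbSegmentStore(lambda seg: nand[seg])
    store.put(0, nand[0])
    store.host_memory[0][1] ^= 0xFF  # simulate a corrupted byte in host memory
    print(store.get(0) == nand[0], store.reloads)
```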